AI has entered the chat

It’s too early to know if it will ultimately bring promise or peril, but artificial intelligence is here.

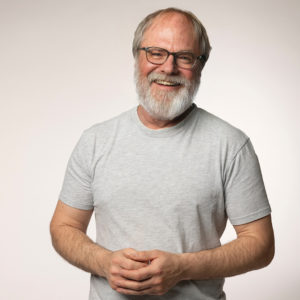

John Kuiphoff AI portrait

The first paragraph of any story is meant to beckon you into reading the rest. So how should I start this one?

I could tell you about the 14 suspiciously similar papers that students submitted to Professor James Stacey Taylor’s philosophy classes soon after the debut of ChatGPT. Or about how Professor Sejong Yoon checked to see if one of the assignments in his advanced class on artificial intelligence was challenging enough by first asking the chatbot to complete it. Or about the syllabus that Professor John Kuiphoff ’04 asked it to write for one of his classes in the design and creative technology department.

Or maybe, since Kuiphoff finds ChatGPT so useful that he always keeps it open on his computer screen, I could ask him to ask its opinion.

“What should the first paragraph of this article be?” Kuiphoff types. And this is what ChatGPT, an artificial intelligence tool, answers:

“Artificial intelligence is transforming the landscape of higher education, and The College of New Jersey is proactively embracing this technological revolution. By adopting a comprehensive and forward-looking approach, TCNJ aims to maximize AI’s potential across various disciplines, fostering interdisciplinary collaborations, innovation, and enhanced learning experiences.”

It goes on for another long and unenlightening sentence, in the voice of a confident but unprepared student padding a term paper with buzzwords and platitudes and trying to hit a page count by enlarging the typeface and narrowing the margins.

“Everything feels a little bit like eating at Applebee’s,” says Kuiphoff about the chatbot’s work. “It’s good, but it’s not memorable.”

ChatGPT was so startling when it appeared last fall because it made the power of AI — its ability to almost instantaneously explore, synthesize, and communicate vast galaxies of information — available to mere civilians in a way it never had been before. And the forecasts about its impact have ranged from the messianic to the apocalyptic: AI will save the world; or maybe it will ruin it.

Applebee’s prose or not, the chatbot was broadly correct when it answered Kuiphoff: AI certainly is transforming the landscape of higher education. Exactly how, though, it couldn’t say — at least not yet.

- James Stacey Taylor / Images: Prequel, Facetune

So let’s go back to those 14 philosophy papers. In the fall semester, Professor Taylor gave a series of prompts for a short essay assignment to the 163 students across his Introduction to Philosophy and Contemporary Moral Issues classes. “Some of them were very weak papers,” Taylor says. “And when I saw several with the same structure, the same content, it was pretty clear what was going on.”

He did what he suspected his students had done: put his prompts into the chatbot. “It came back with papers that were essentially similar to the students’ papers, identical in some cases,” he says.

Was this the start of the plague of plagiarism that educators feared ChatGPT would unleash? Would the school prohibit the use of chatbots? “That would be like banning the internet,” says Taylor, who also happens to be the college’s chief academic integrity officer.

The cluster of copied papers in his own classes did not metastasize elsewhere on campus. “Other cases using ChatGPT have appeared, but I think that there are far fewer than you might have expected in November,” he says, referring to the month the chatbot was introduced. “It became clear that this is pretty easy to detect.”

Those 14 students got zeros for their assignments, but they revealed the chatbot’s weak spots. Chatbots are adept at multiple-choice tests, and can plausibly respond to broad prompts. But if professors ask students to reflect on last Tuesday’s class discussion or a student’s personal experience, AI will be flummoxed.

TCNJ has not yet made any institutional changes in response to AI. “We don’t need to jump right to our academic integrity policy and add a new section on cheating by using AI,” Provost Jeffrey Osborn says. “The opportunity for the college is to determine how we can leverage this technology in both an educational and operational context. It is incumbent on us to design course experiences and methods to evaluate student learning with the knowledge that ChatGPT and other forms of AI are here to stay.”

A broadly representative task force is forming to look at the larger questions: how to assess student work, how to expand and enhance teaching strategies, and how to ensure the integrity of faculty research. “Higher education is much more than just content,” says Osborn. “It’s much more than producing X number of papers or products or deliverables. It’s about learning how to think critically. It’s about learning how to synthesize, how to experience different perspectives, and reach a new level of understanding as a result of that.”

But AI is moving so fast it may be hard to keep up — a pace that has surprised even Professor Yoon in the computer science department, whose work in the field started 15 years ago, when he was a graduate student helping AI applications better identify malignant tumors on mammograms. “Previously, we could imagine what’s possible and what’s not possible on, say, a three-year or five-year horizon,” he says. “But now, at the speed of development these days, even a six-month or one-year horizon would be really challenging to predict.”

Hardware and the vast expansion of computing power have made this possible. So, as Yoon emphasizes, have the theoretical foundations and algorithms that increasingly work more like the human brain. “The dystopian ‘Terminator’ — that’s not going to happen,” he says. “But the potential problem is with the humans who are using them.”

Humans, Yoon worries, who use AI as an accomplice for cybercrimes, for instance. Or humans, as Professor Kevin Michels worries, who use it to spread lies cloaked as truth.

“I’m increasingly taken by its power, and increasingly concerned by some of the implications,” says Michels, the founding director of the business school’s Center for Innovation and Ethics. “If you ask a chatbot about a historic event or scientific claim, it may draw from a vast array of statements, not all of which are credible,” he says. “And how do we distinguish true from false in an answer that is authoritative looking?”

New technology, Michels notes, often brings dangers alongside benefits: Cars moved us quickly but left carnage on our roads. “The history of technological introduction tells us that we would do well to reflect before we deploy,” he says. “But AI is like an arms race now, and every company is going to do everything they can to get out the next iteration, so there’s not going to be a lot of deliberation before release.”

So can AI be a partner instead of an adversary? As a high school chess team veteran, Michels sees a hopeful model in the aftermath of chess champion Garry Kasparov’s 1997 loss to a computer. “One of the unsung stories is that over time we discovered that while a machine can beat the best player on the planet, the best player on the planet, coupled with a machine, is actually better than the machine,” he says.

Working together — that’s a strategy he’s trying in his Innovation class this semester, asking students to first use the chatbot to teach themselves about five different technologies. “And then phase two is to talk about how those technologies could be brought together in an interesting way to create something that’s valuable in the world,” he says.

- Kevin Michels / Images: Prequel

“I think it’s critical for us in the higher education community to ask foundational questions about what education should look like in light of the power of these tools and their extrapolated future,” Michels says. “Could AI be individualized in a way that could make it tremendously valuable as a teacher? Is this the great democratization of learning?”

Kuiphoff thinks so. “I think it could force higher education to be better,” he says. He has always worked at the far edge of technology, using computers to make actual things (he holds up an engraved brass piece for a 3D-printed drum set as an example). But after spending a feverish week testing what ChatGPT could do while housebound with COVID-19 over winter break, he speaks of it with an evangelical fervor. He uses it to code programs, to help him write articles, and to generate dozens of design prototypes for objects he’s making, and he envisions students using it for what he calls decentralized self-directed learning.

“When I really got to intimately work with these technologies and push their boundaries to the limit, I realized that people just don’t understand how powerful this is and how much is going to change within the next couple of years,” he says. “This might be bigger than the internet itself.”

But that syllabus he asked ChatGPT to write for him? He ended up not using it. “It was a better syllabus than I could ever write, but it wasn’t mine,” he says. It gave him some direction and ideas and readings, which he then adapted into something that was his own. “I like my syllabus to be a little less syllabus-y.”

I turn to Kuiphoff and ChatGPT again to help conclude the story, to launch readers into their own round of further inquiry and contemplation. Kuiphoff types: “What should the last paragraph be?”

“In conclusion, TCNJ’s proactive approach to embracing AI is an essential step towards shaping the future of higher education and ensuring its students are well-prepared for an increasingly AI-driven world.”

It goes on for two more word-salad sentences, still sounding like a procrastinating student who has run out of both caffeine and ideas. Not much of an ending, so let’s try another question: How will it answer once it has read this story — or, more precisely, ingested the information in it? Will it sound more intelligent, more insightful, more human? Will it give us even more occasion to hope, or to fear, or both?

Altered images

To illustrate this story, we asked John Kuiphoff ’04, professor of design and creative technology, to produce portraits of the subjects using artificial intelligence applications. We provided him with several photos of each person, shot against a white background in various poses and angles. He explains what he did here:

APPS USED

FaceApp, Facetune, and Prequel

HOW’S IT WORK?

You upload 10–20 photos to the app and the software generates a collection of avatars. Facetune and Prequel generate random backgrounds and features like hair color. You can choose from theme categories such as mythical creatures, gothic, and soft glam. The software isn’t sophisticated enough yet to add user controls.

THE PROCESS AGED YOU

My image was created with FaceApp. On that app you can alter features such as age, glasses, and beard length.

ONE NOT LIKE THE OTHERS

Sejong Yoon, computer science professor, was adamant that he did not want photos of himself put through an AI tool.

THE LIMITATIONS

I generated about 600 photos of each person; most were not very good. Many look distorted and weird. I got one or two good avatars out of every 100 images generated.

Pictures: John Emerson and Peter Murphy

Posted on June 12, 2023